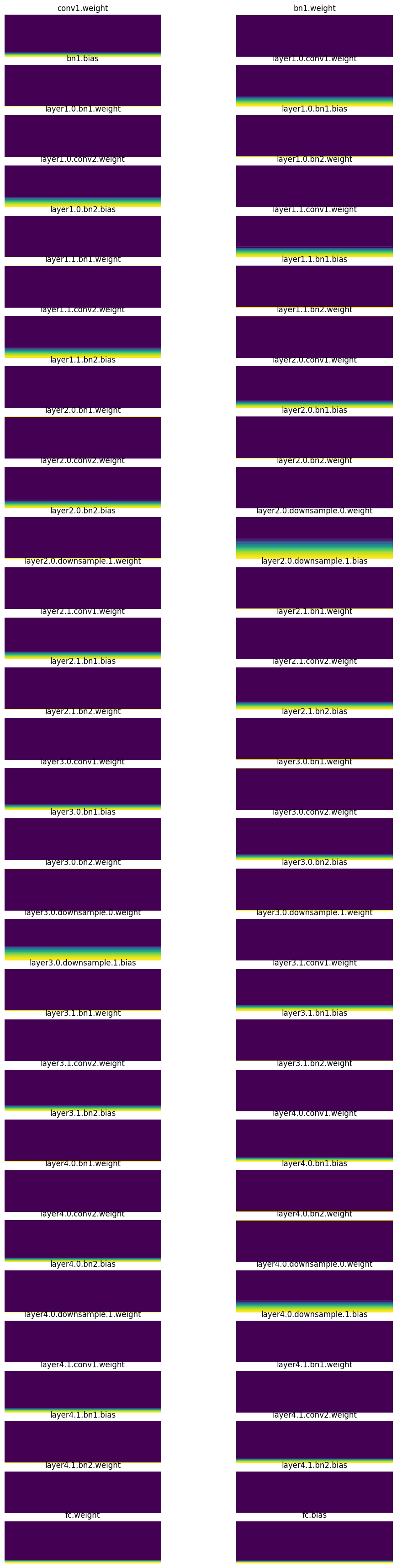

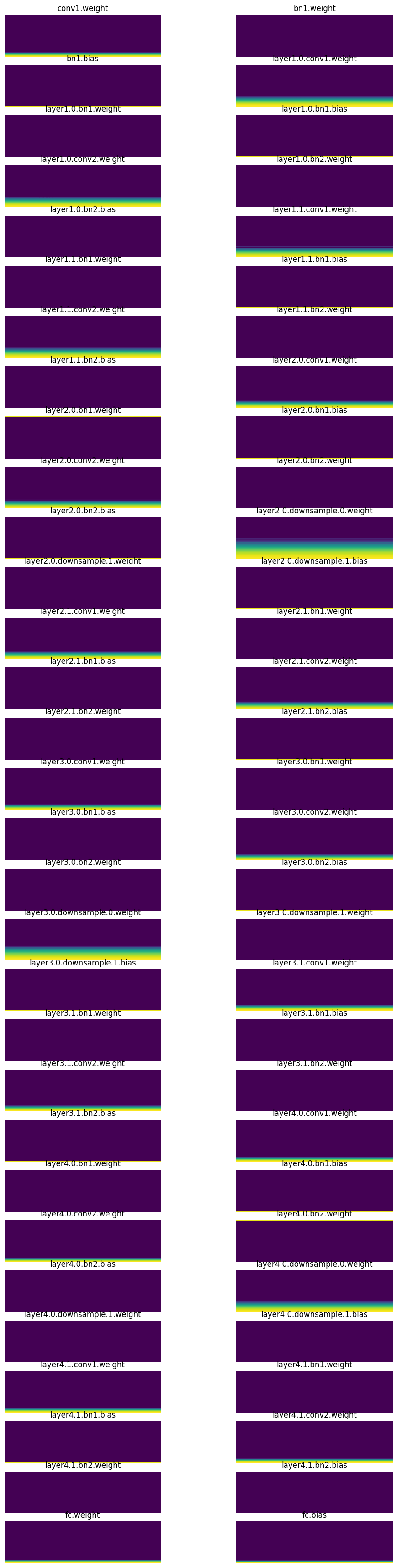

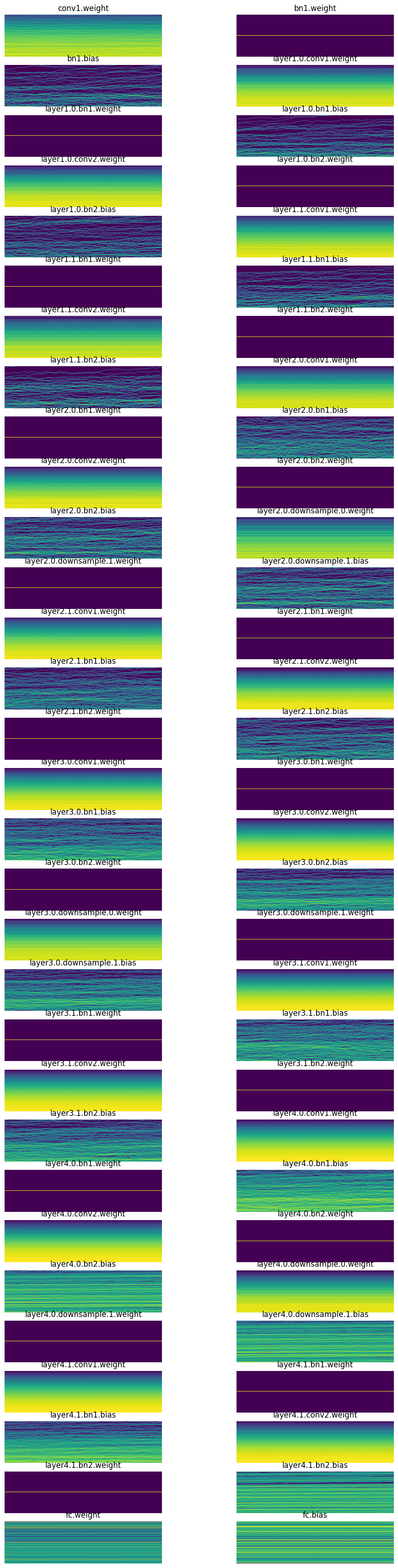

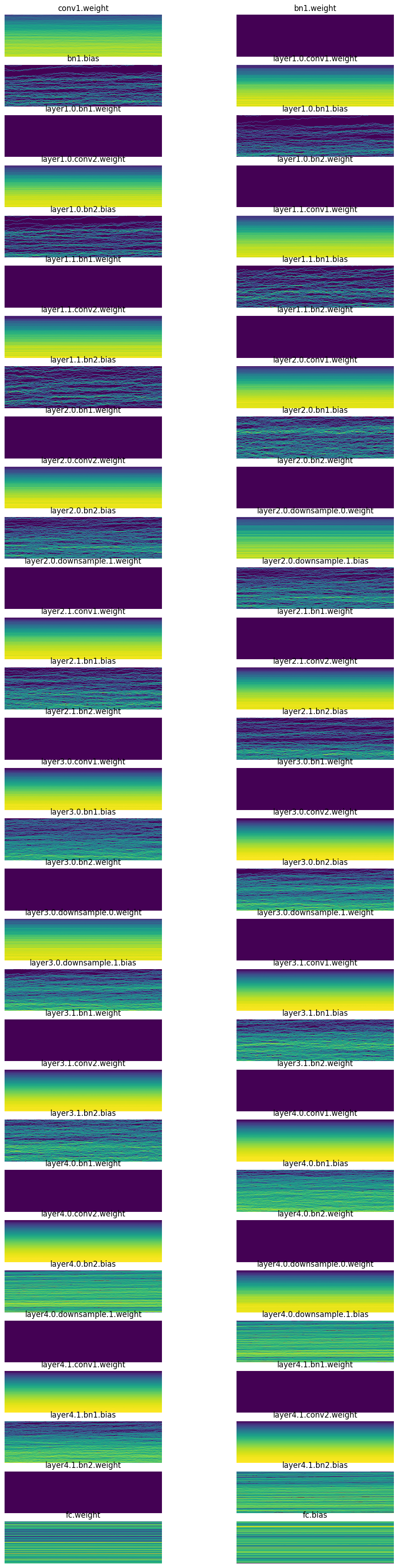

[['bn1.weight', 0.0, 0.006746089085936546, 0.0060293180868029594],

['bn1.bias', 0.004148084670305252, 0.003786720335483551, 0.003580566728487611],

['layer1.0.bn1.weight', 0.0, 0.005284080747514963, 0.005399828776717186],

['layer1.0.bn1.bias', 0.004158522468060255, 0.003640677547082305, 0.0034905048087239265],

['layer1.0.bn2.weight', 0.0, 0.005027716979384422, 0.004458598792552948],

['layer1.0.bn2.bias', 0.0027511364314705133, 0.0025208923034369946, 0.0024442975409328938],

['layer1.1.bn1.weight', 0.0, 0.00424396526068449, 0.0039586215279996395],

['layer1.1.bn1.bias', 0.002898486563935876, 0.002260061912238598, 0.002269925782456994],

['layer1.1.bn2.weight', 0.0, 0.004007537383586168, 0.0035925875417888165],

['layer1.1.bn2.bias', 0.001713279401883483, 0.0016484896186739206, 0.0014464608393609524],

['layer2.0.bn1.weight', 0.0, 0.0031620513182133436, 0.003240257501602173],

['layer2.0.bn1.bias', 0.0017084497958421707, 0.0016835747519508004, 0.0014696172438561916],

['layer2.0.bn2.weight', 0.0, 0.0031509941909462214, 0.002757459646090865],

['layer2.0.bn2.bias', 0.0018845133017748594, 0.0020461969543248415, 0.0017825027462095022],

['layer2.1.bn1.weight', 0.0, 0.003080494701862335, 0.0026580658741295338],

['layer2.1.bn1.bias', 0.0015702887903898954, 0.0016526294639334083, 0.0015195324085652828],

['layer2.1.bn2.weight', 0.0, 0.0026030924636870623, 0.002109265886247158],

['layer2.1.bn2.bias', 0.0011786026880145073, 0.0013054630253463984, 0.001151321455836296],

['layer3.0.bn1.weight', 0.0, 0.0020066993311047554, 0.001799717196263373],

['layer3.0.bn1.bias', 0.0011105844751000404, 0.0010541853262111545, 0.000998087227344513],

['layer3.0.bn2.weight', 0.0, 0.0021246818359941244, 0.0017275793943554163],

['layer3.0.bn2.bias', 0.001101263682357967, 0.0010443663923069835, 0.0009456288535147905],

['layer3.1.bn1.weight', 0.0, 0.001690064324066043, 0.001411611563526094],

['layer3.1.bn1.bias', 0.0008357313927263021, 0.0008858887013047934, 0.0007302637677639723],

['layer3.1.bn2.weight', 0.0, 0.0014081220142543316, 0.0011884564300999045],

['layer3.1.bn2.bias', 0.0006777657545171678, 0.0006280804518610239, 0.0004925086977891624],

['layer4.0.bn1.weight', 0.0, 0.0010809964733198285, 0.0009125272044911981],

['layer4.0.bn1.bias', 0.0005735242739319801, 0.0005982829607091844, 0.0004978459910489619],

['layer4.0.bn2.weight', 0.0, 0.0018873803783208132, 0.0017549480544403195],

['layer4.0.bn2.bias', 0.002376189222559333, 0.0023989235050976276, 0.002254981081932783],

['layer4.1.bn1.weight', 0.0, 0.0010339637519791722, 0.0008248933590948582],

['layer4.1.bn1.bias', 0.0006004928727634251, 0.0006056932033970952, 0.0004860978224314749],

['layer4.1.bn2.weight', 0.0, 0.002108693355694413, 0.00195809337310493],

['layer4.1.bn2.bias', 0.0034636915661394596, 0.0034976028837263584, 0.003284711856395006]]